AnyHome

Generating your dream home from any description.

The paper is hosted on arXiv. The source code can be found on GitHub.

Update (Jul 1, 2024): It is my greatest honor to announce that our paper has been accepted to ECCV 2024! Sixteen months of hard work did pay off. I have now gained hands-on experience in taking a paper from scratch to publication at a top conference, including ideation, experiments, paper writing, and submission. I am grateful to have worked with Rao on this project, who has been a great mentor and friend to me. This “pipeline-level” paper is my first attempt at academic research: finding a novel topic that interests me and working on it with passion. I look forward to extending my journey in academic research and to doing my best to produce groundbreaking work (like Transformers and ResNet) in the future, no matter how long it takes.

The year 2023 witnessed the boom of Artificial Intelligence Generated Content (AIGC), starting with the outbreak of ChatGPT. ChatGPT’s huge potential for boosting human productivity prompted me to embark on research into Large Language Models. As time went on, countless AIGC models were developed, capable of synthesizing 1D content (text, like ChatGPT) and 2D content (images, like Stable Diffusion). However, 3D content generation was still in its infancy, with no killer model yet widely adopted.

Will 3D generation be the next huge breakthrough and opportunity in AI? I kept pondering this question. Indeed, 3D representation has an innate advantage: it aligns with how human beings perceive the world. This makes it more intuitive and realistic, enabling a wide range of applications, especially as the concept of the Metaverse became popular.

“Why not catch up with this potential breakthrough?” I urged myself. The thought of carrying out research in 3D generation immediately sparked my interest. My previous research experiences had taught me what research is at its core: a commitment to steadily accumulating knowledge and insights, which would enable me to make meaningful contributions in the future. Researching AI would give me the opportunity to explore everything from classic theories to current trends by reading extensive literature, and to settle down to derive complex formulas and build different architectures. It’s one thing to understand how Transformers work through self-attention; it’s quite another to implement one yourself and see, through ablation studies, how each component, from positional embeddings to skip connections, is indispensable.

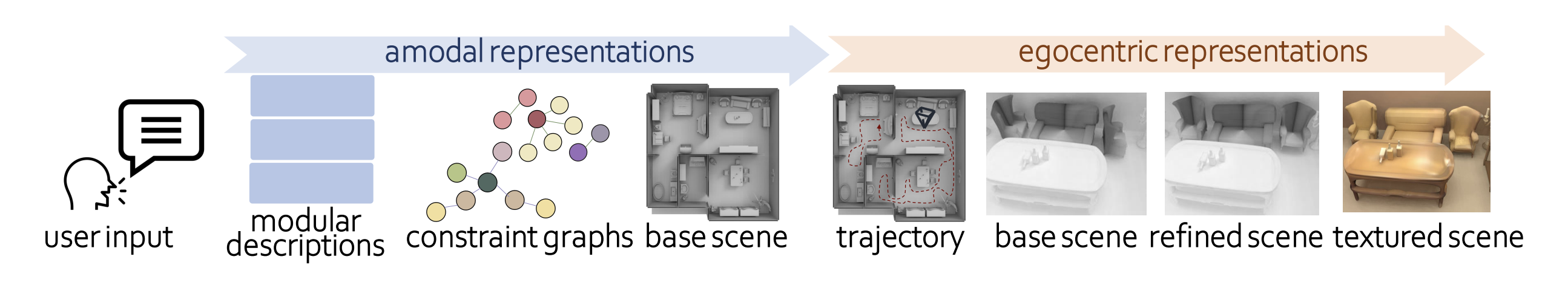

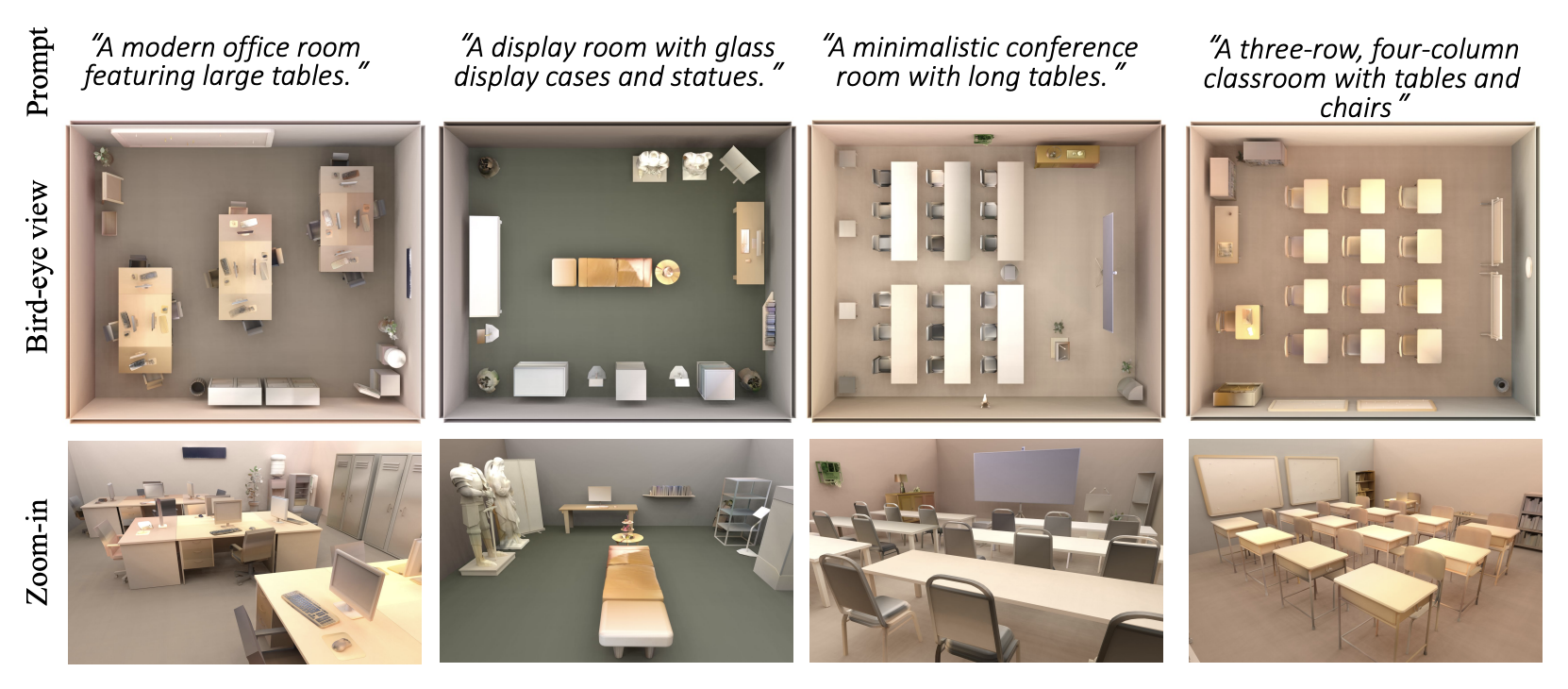

Fortunately, in summer 2023 I met Rao Fu by coincidence, who has been both my mentor and “comrade” ever since. We started researching text-guided, “egocentric” indoor scene generation, as we both believed that mimicking the human “egocentric” perspective would be more intuitive and realistic, just like how we explore our own homes. As an avid reader, I always found myself amazed by vivid descriptions of architecture in novels, whether the ancient Eastern mansion in Dream of the Red Chamber or the magnificent estate in The Great Gatsby, and I wondered if we could make the dream homes in novels come true. That’s why our project was named “AnyHome”.

Every academic research project begins with a literature review, a period both tedious and fruitful, as it introduces researchers to their domain. Rao assigned my collaborator Zichen Liu and me over 30 papers to read over the weekend. We spent our time in a cafe from ten in the morning until eight in the evening, reading and discussing the papers. We summarized each one and analyzed its pros and cons (an example of our notes can be found here). Looking back, it was a significant accomplishment. We shared a genuine desire for knowledge: we undertook the research not just to publish papers, but to truly learn about the field. This desire allowed us to engage deeply and continuously without being distracted or impatient, teaching me the importance and benefits of having the right motivation.

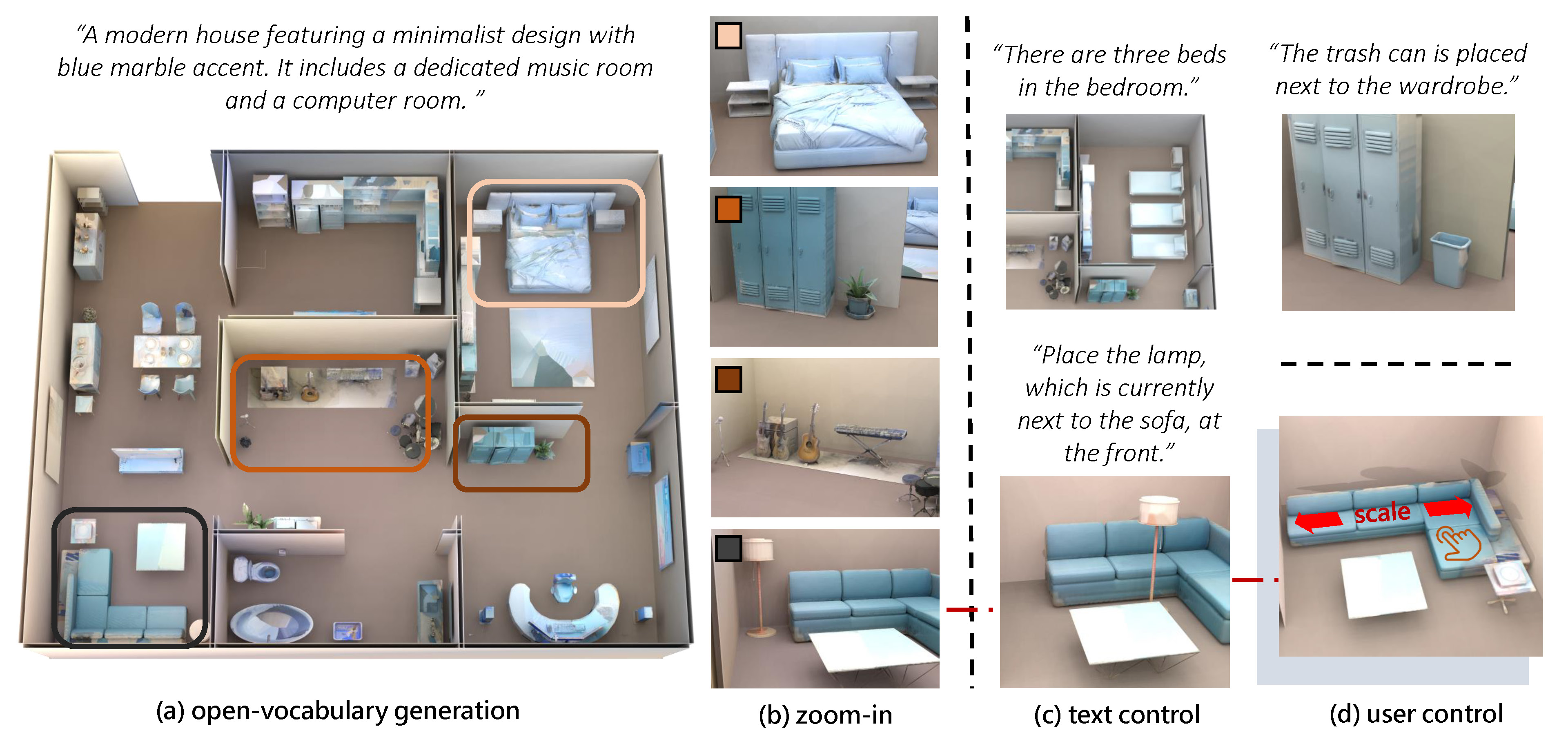

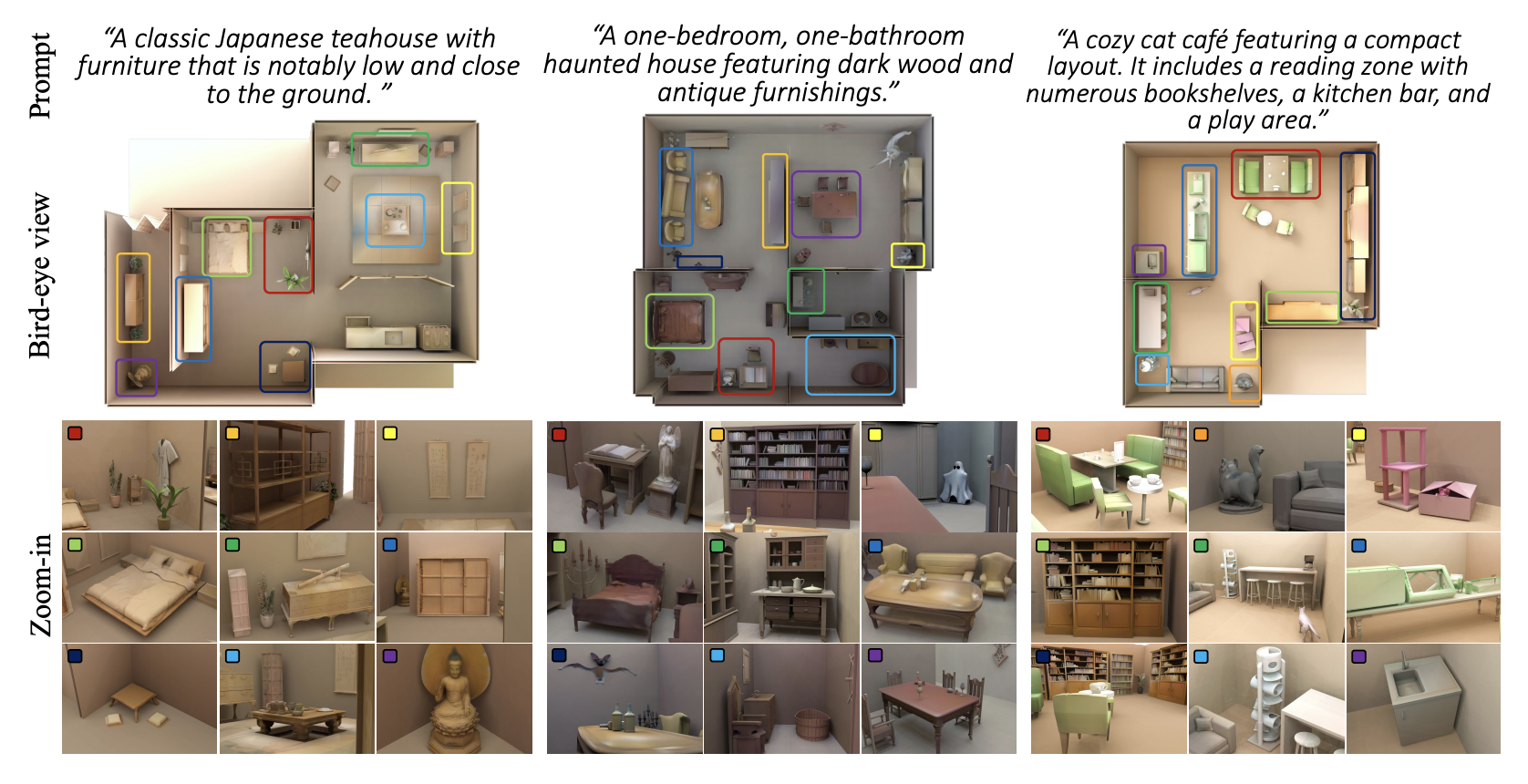

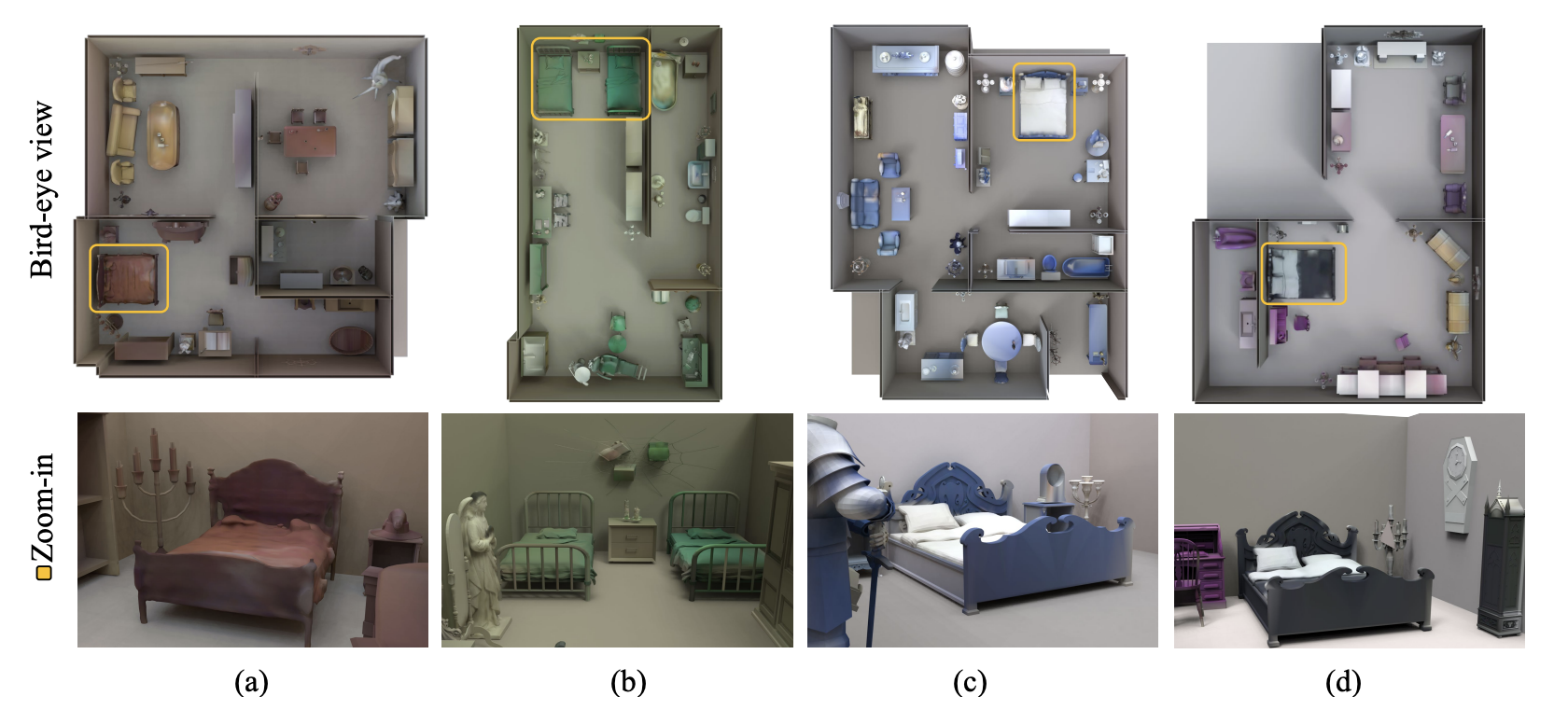

We kept working hard and finally crafted the pipeline of AnyHome. Taking a free-form textual input, it generates a house-scale scene by: (i) comprehending and elaborating on the user’s textual input by querying an LLM with templated prompts; (ii) converting the textual descriptions into structured geometry via intermediate representations such as graphs; (iii) employing a Score Distillation Sampling (SDS) process with a differentiable renderer to refine object placements; and (iv) applying depth-conditioned texture inpainting for egocentric texture generation. I won’t go into the details here; you can find the complete technical description in our paper.
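As a rough illustration of step (ii) only, here is a toy sketch of what a graph-shaped intermediate representation of a house might look like. It assumes the LLM in step (i) has already returned a structured description; the `spec` format and the names `Room`, `build_house_graph`, and `is_connected` are all hypothetical and are not taken from the AnyHome codebase. Rooms become nodes and doors or openings become undirected edges, with a simple breadth-first check that no room is left isolated.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Room:
    """A node in a hypothetical house graph."""
    name: str
    room_type: str
    objects: list = field(default_factory=list)


def build_house_graph(spec):
    """Convert a structured house description into nodes (rooms) and an
    undirected adjacency map whose edges stand for doors or openings."""
    rooms = {r["name"]: Room(r["name"], r["type"], list(r.get("objects", [])))
             for r in spec["rooms"]}
    adjacency = {name: set() for name in rooms}
    for a, b in spec["connections"]:
        if a not in rooms or b not in rooms:
            raise ValueError(f"connection refers to an unknown room: {a!r}-{b!r}")
        # Record each connection in both directions (undirected graph).
        adjacency[a].add(b)
        adjacency[b].add(a)
    return rooms, adjacency


def is_connected(adjacency):
    """Breadth-first search: every room should be reachable from any other,
    otherwise the layout would contain an isolated room."""
    if not adjacency:
        return True
    start = next(iter(adjacency))
    seen = {start}
    queue = deque([start])
    while queue:
        for neighbor in adjacency[queue.popleft()]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(adjacency)


# Hypothetical output of step (i), e.g. parsed from an LLM's JSON reply.
spec = {
    "rooms": [
        {"name": "hall", "type": "living room", "objects": ["sofa", "tea table"]},
        {"name": "study", "type": "library", "objects": ["desk", "bookshelf"]},
        {"name": "garden_room", "type": "sunroom"},
    ],
    "connections": [("hall", "study"), ("hall", "garden_room")],
}

rooms, adjacency = build_house_graph(spec)
print(sorted(adjacency["hall"]))   # ['garden_room', 'study']
print(is_connected(adjacency))     # True
```

A graph like this only fixes which rooms exist and how they connect; turning it into walls, floors, and placed furniture, and then refining those placements, is left to the later steps of the pipeline.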

My experience with AnyHome gave me deeper insight into research itself. Initially, I underestimated the complexity of research methodologies, fooled by how simply their creators seemed to explain their motivation and justification in a few sentences. However, I learned that a single sentence in a paper can represent the culmination of countless hours of reading, discussing, and experimenting, along with numerous failures and pivots. In the AnyHome project, I was responsible for the entire structured mesh generation and part of the egocentric inpainting. This work spanned half of my 10th-grade year and all of 11th grade, and it was marked by many unexpected challenges. At first, we considered using text-to-image models combined with depth estimation for mesh synthesis (similar to Text2Room), but after seven months we realized this approach was ineffective and shifted to depth-conditioned models with texture back-propagation. This cycle of trial, error, and adaptation repeated, making each achievement significant and worth celebrating. Through this process, I gained hands-on insight into many aspects of scene generation, including the intricacies and unglamorous chores of the field. Moreover, this long research journey profoundly shaped my resilience, teaching me that true innovation comes not from isolated successes but from the perseverance to navigate and overcome numerous challenges and frustrations.

The time we spent did pay off. The paper was orally presented at the New England Computer Vision Workshop (NECV 2023), earning compliments from the audience for its innovative approach and interesting results. What astonished me even more was our decision to submit the paper to the Conference on Computer Vision and Pattern Recognition (CVPR 2024). In my mind, the conference was the “olympiad” for computer vision researchers, the venue of countless foundational works like ResNet. I couldn’t believe that I, a high school student, could submit a paper to such a prestigious conference.

Submitting the paper to a top conference was exciting but also nerve-wracking. Everything, from the paper’s format to the submission system, was new to me and required meticulous attention to detail. As I worked on creating compelling visualizations and fine-tuning the wording to convey our ideas more effectively, I gradually became familiar with the tools and conventions of academic presentation. This experience laid a solid foundation for the research I envision pursuing throughout my life. I distinctly remember the night before the submission deadline, staying up until 4 a.m., which echoed Kobe Bryant’s famous line about seeing Los Angeles at 4 a.m. During a Zoom meeting with Rao and Zichen that lasted a whole day, each of us focused on our own tasks, and I felt a strong sense of unity and belonging, as if we were “comrades” united in achieving a common goal.

Although the paper was rejected by CVPR, the experience proved invaluable. I gained insights not only into academic processes and the field of 3D scene generation but also into the nature of research and the qualities it demands. The past year of research on AnyHome marked a significant period of growth, as I transitioned from the mindset of a high school student to that of a resilient researcher.

Failure is the start of success. We are reflecting on the reviewers’ feedback and preparing to submit the paper to other conferences. We are also working on the open-source codebase, hoping to make it more accessible to the community. I am grateful for the opportunity to work on this project, and I look forward to future research and development in the field of AI.

Sweet Notes

Really happy that our paper received its second citation today. It’s a great honor to be recognized by the community. (April 6, 2024)

Fourth Google Scholar citation! (June 3, 2024)